7. Analytical Solutions II

7.1. Finding general solutions of linear homogeneous systems

Let’s begin with a two-dimensional or planar system. We’ll keep things simple again and focus on autonomous, linear and homogeneous ODEs.

Example 7.1 (Damped oscillation)

Again, think of Example 3.2 so you don’t feel like being bounced around too much!

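Consider the system:

\[ \begin{bmatrix} \dot{x}_{1}(t) \\ \dot{x}_{2}(t) \end{bmatrix} = \underbrace{\begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix}}_{\mathbf{A}} \begin{bmatrix} x_{1}(t) \\ x_{2}(t) \end{bmatrix}. \tag{7.1} \]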

To motivate things, let’s go to our concrete example in Equation (7.1).

An easy case is when \(\mathbf{A}\) is a diagonal matrix. In the example in Equation (7.1), this means \(a_{12} = a_{21} = 0\), so the two equations are decoupled: they are two scalar ODEs in which the variables \(x_{1}\) and \(x_{2}\) do not affect each other. We already know how to solve these scalar equations!

What if \(a_{12} \neq 0\) and/or \(a_{21} \neq 0\)? The solution will depend on the properties of the linear map or matrix \(\mathbf{A}\) and this is summarized by its eigenvalues.

There are three cases to consider:

Distinct real eigenvalues

Complex eigenvalues

Repeated real eigenvalues

7.1.1. Eigenvalues and eigenvectors

Why three cases?

Let’s take a quick pit stop to refresh ourselves on the concepts of eigenvalues and eigenvectors.

Consider a linear operator, transformation, or map \(T\) that has inputs or arguments \(\mathbf{x}\). We write this map as \(\mathbf{x} \mapsto T(\mathbf{x})\).

A special instance is where \(T\) maps the finite-dimensional space \(\mathbb{R}^{k}\) into itself, just like the case of Equation (7.1). In this case, \(T(\mathbf{x}) = \mathbf{A}\mathbf{x} \in \mathbb{R}^{k}\), where \(\mathbf{A}\) is a \(k \times k\) matrix.

Definition 7.1

Let \(T\) be a linear transformation from a vector space \(V\) over a field \(F\) into itself.

If \(\mathbf{v}\) is a nonzero vector in \(V\), then \(\mathbf{v}\) is an eigenvector of \(T\) if \(T(\mathbf{v})\) is a scalar multiple \(\lambda \in F\) of \(\mathbf{v}\), i.e.,

\[ T(\mathbf{v}) = \lambda \mathbf{v}. \tag{7.2} \]

The scalar \(\lambda \in F\) is called an eigenvalue or characteristic root associated with \(\mathbf{v}\).

For our purposes, \(F\) can either be \(\mathbb{R}\) (the set of real numbers) or \(\mathbb{C}\) (the set of complex numbers).

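Coming back to our special case of Equation (7.1), an eigenvalue-eigenvector pair would satisfy the system of equations:

\[ \mathbf{A}\mathbf{v} = \lambda\mathbf{v}. \tag{7.3} \]

Rearranging this, we have the condition

\[ (\mathbf{A} - \lambda\mathbf{I})\mathbf{v} = \mathbf{0}, \tag{7.4} \]

where \(\mathbf{I}\) is the identity matrix of the same size as \(\mathbf{A}\).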

Example 7.2 (Two variables)

Let \(k = 2\), so that \(T(\mathbf{v}) = \mathbf{A}\mathbf{v}\in\mathbb{R}^{2}\).

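We can write out Equation (7.4) verbosely as the system of equations:

\[ \begin{aligned} (a_{11} - \lambda)v_{1} + a_{12}v_{2} &= 0, \\ a_{21}v_{1} + (a_{22} - \lambda)v_{2} &= 0. \end{aligned} \]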

If we fix a scalar \(\lambda\), then we have two equations determining the two unknowns that make up an eigenvector \(\mathbf{v} = (v_{1}, v_{2})\).
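As a hypothetical numerical illustration (the matrix is chosen for this sketch and is not from the text), fixing \(\lambda\) and solving the two equations by hand looks like this:

\[ \mathbf{A} = \begin{bmatrix} 4 & 1 \\ 2 & 3 \end{bmatrix}, \quad \lambda = 5: \qquad \begin{aligned} (4-5)v_{1} + v_{2} &= 0, \\ 2v_{1} + (3-5)v_{2} &= 0 \end{aligned} \quad \Longrightarrow \quad \mathbf{v} = c\,(1, 1), \ c \neq 0. \]

Both equations reduce to \(v_{2} = v_{1}\), so they are redundant, and that redundancy is what allows a nonzero solution. The same steps with \(\lambda = 2\) give \(\mathbf{v} = c\,(1, -2)\). For a value that is not an eigenvalue, say \(\lambda = 0\), the equations \(4v_{1} + v_{2} = 0\) and \(2v_{1} + 3v_{2} = 0\) are independent and only \(\mathbf{v} = \mathbf{0}\) solves them.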

So you ask, what is an eigenvector?

Let’s try to visualize what Equation (7.2), or its finite-dimensional counterpart in Equation (7.3), is telling us.

An eigenvector is a particular nonzero vector \(\mathbf{v}\) such that, when we transform it under the linear map \(T\), we merely stretch or shrink it along its own line through the origin. The sign of \(\lambda\) determines whether its direction is preserved (\(\lambda > 0\)) or reversed (\(\lambda < 0\)), and the absolute size of \(\lambda\) determines how much it is lengthened or shortened.

We can also see immediately that the eigenvector is not rotated off its line. So any nonzero scalar multiple \(c\mathbf{v}\) (for example, \(\lambda\mathbf{v}\) when \(\lambda \neq 0\)) is also an eigenvector with the same eigenvalue \(\lambda\).
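A quick numerical check of the definition may help. This is a minimal sketch assuming NumPy and a hypothetical matrix \(\mathbf{A}\) (not from the text); `np.linalg.eig` returns the eigenvalues and unit-length eigenvectors as columns, in no guaranteed order.

```python
import numpy as np

# Hypothetical example matrix (not from the text); its eigenvalues are 5 and 2.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

eigvals, V = np.linalg.eig(A)  # columns of V are unit-length eigenvectors

for lam, v in zip(eigvals, V.T):
    # Equation (7.3): A v = lambda v, so A only stretches v along its own line.
    print(np.allclose(A @ v, lam * v))
    # Any nonzero rescaling c v is still an eigenvector for the same lambda.
    c = -3.0
    print(np.allclose(A @ (c * v), lam * (c * v)))
```

Each check should print `True`.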

We will send this little geometric piggy to market soon. It will pay us dividends when we need to describe the solution of particular classes of ODEs that admit analytical solutions.

What about an eigenvalue?

Up to now, we have mentioned eigenvalues and how they are associated with eigenvectors.

Let’s fix ideas using Equation (7.4). Notice that for a nontrivial solution (\(\mathbf{v} \neq \mathbf{0}\)) to this equation, the matrix \((\mathbf{A} - \lambda\mathbf{I})\) must be singular, i.e.,

\[ \det(\mathbf{A} - \lambda\mathbf{I}) = 0. \tag{7.5} \]

This is called the characteristic equation of \(\mathbf{A}\).

If we work out the determinant on the left, that is going to be a polynomial equation in \(\lambda\).

Example 7.3 (Two-variables and characteristic equation)

Consider Equation (7.5) for the two-variable case.

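In this case, Equation (7.5) says that \(\lambda\) is a root of a second-order polynomial, i.e., a quadratic equation in \(\lambda\):

\[ \lambda^{2} - \text{tr}(\mathbf{A})\lambda + \det(\mathbf{A}) = 0, \tag{7.6} \]

where \(\text{tr}(\mathbf{A}) = a_{11}+a_{22}\) and \(\det(\mathbf{A}) = a_{11}a_{22}-a_{12}a_{21}\).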

An eigenvalue or characteristic root \(\lambda\) will be a scalar that satisfies (7.6).

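From Example 7.3, the quadratic formula gives the roots of (7.6) as

\[ \lambda_{1,2} = \frac{\text{tr}(\mathbf{A}) \pm \sqrt{\text{tr}(\mathbf{A})^{2} - 4\det(\mathbf{A})}}{2}, \]

so the sign of the discriminant \(\text{tr}(\mathbf{A})^{2} - 4\det(\mathbf{A})\) gives us three cases to consider:

Distinct real eigenvalues (positive discriminant)

Complex eigenvalues (negative discriminant)

Repeated real eigenvalues (zero discriminant)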
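The three cases can be read off directly from the trace and determinant. Below is a minimal sketch using only Python's standard `cmath` module; the function name `classify_planar`, the tolerance `tol`, and the three test matrices are hypothetical illustrations, not from the text.

```python
import cmath

def classify_planar(A, tol=1e-12):
    """Return (case, lambda_1, lambda_2) for a 2x2 matrix A, via Equation (7.6)."""
    (a11, a12), (a21, a22) = A
    tr = a11 + a22                    # tr(A)
    det = a11 * a22 - a12 * a21       # det(A)
    disc = tr**2 - 4 * det            # discriminant of lambda^2 - tr(A) lambda + det(A)
    root = cmath.sqrt(disc)           # complex square root, so disc < 0 is allowed
    lam1, lam2 = (tr + root) / 2, (tr - root) / 2
    if disc > tol:
        case = "distinct real"
    elif disc < -tol:
        case = "complex"
    else:
        case = "repeated real"
    return case, lam1, lam2

print(classify_planar([[4, 1], [2, 3]]))    # distinct real: 5 and 2
print(classify_planar([[0, 1], [-5, -2]]))  # complex: -1 + 2i and -1 - 2i
print(classify_planar([[2, 1], [0, 2]]))    # repeated real: 2 (twice)
```

The roots are returned as complex numbers in every case so that one formula covers all three; for floating-point inputs, `tol` decides when a tiny discriminant counts as zero.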

7.1.2. Distinct real eigenvalues

Mini roadmap

Let’s do the linear algebra lifting and then later, we will connect the algebra to the geometry of the solutions in the state space view.

We’ll use a well-known fact from linear algebra.

Theorem 7.1

Let \(\lambda_{1}, ..., \lambda_{k}\) be \(k\) distinct eigenvalues of the \(k \times k\) matrix \(\mathbf{A}\). Let \(\mathbf{v}_{1}, ..., \mathbf{v}_{k}\) be the corresponding eigenvectors—i.e., \(\mathbf{A}\mathbf{v}_{i} = \lambda_{i}\mathbf{v}_{i}\) for every \(i=1,...,k\). Then \(\mathbf{v}_{1}, ..., \mathbf{v}_{k}\) are linearly independent.

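If \(\mathbf{A}\) has \(k\) distinct real eigenvalues, then stacking the equations \(\mathbf{A}\mathbf{v}_{i} = \lambda_{i}\mathbf{v}_{i}\) side by side, we can write

\[ \mathbf{A}\mathbf{P} = \mathbf{P}\boldsymbol{\Lambda}, \tag{7.7} \]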

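where \(\mathbf{P} = [\mathbf{v}_{1}, \ldots, \mathbf{v}_{k}]\) is a \(k \times k\) matrix whose columns are the \(k\) linearly independent eigenvectors, and

\[ \boldsymbol{\Lambda} = \begin{bmatrix} \lambda_{1} & 0 & \cdots & 0 \\ 0 & \lambda_{2} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \lambda_{k} \end{bmatrix} \]

is a diagonal matrix of the eigenvalues.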

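Now, since \(\mathbf{P}\) has linearly independent columns, it is non-singular and hence invertible. Post-multiplying both sides of \(\mathbf{A}\mathbf{P} = \mathbf{P}\boldsymbol{\Lambda}\) by \(\mathbf{P}^{-1}\), we may write

\[ \mathbf{A} = \mathbf{P}\boldsymbol{\Lambda}\mathbf{P}^{-1}, \tag{7.8} \]

or equivalently \(\mathbf{P}^{-1}\mathbf{A}\mathbf{P} = \boldsymbol{\Lambda}\). That is, we can diagonalize \(\mathbf{A}\).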
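The diagonalization \(\mathbf{A} = \mathbf{P}\boldsymbol{\Lambda}\mathbf{P}^{-1}\) is easy to confirm numerically. A minimal sketch, assuming NumPy and a hypothetical matrix with distinct real eigenvalues (5 and 2):

```python
import numpy as np

A = np.array([[4.0, 1.0],
              [2.0, 3.0]])          # hypothetical; distinct real eigenvalues 5 and 2

lam, P = np.linalg.eig(A)          # P = [v_1, v_2], eigenvectors as columns
Lam = np.diag(lam)                 # Lambda = diag(lambda_1, lambda_2)
P_inv = np.linalg.inv(P)           # exists because the columns of P are independent

print(np.allclose(A @ P, P @ Lam))        # A P = P Lambda
print(np.allclose(A, P @ Lam @ P_inv))    # Equation (7.8)
print(np.allclose(P_inv @ A @ P, Lam))    # A is diagonalized
```

All three checks should print `True`. The ordering of the eigenvalues in `lam` does not matter, as long as the columns of `P` follow the same order.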

How is this fact useful?

Recall that if \(\mathbf{A}\) is not a diagonal matrix, the equations in the ODE system are coupled. Straight off the bat, we may not know how to solve this.

However, what if we can exploit the fact that \(\mathbf{A}\) is diagonalizable? That is, what if it has distinct real eigenvalues?

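If the system \(\dot{\mathbf{x}}(t) = \mathbf{A}\mathbf{x}(t)\), the \(k\)-variable version of (7.1), is such that \(\mathbf{A}\) has distinct real eigenvalues, then by Equation (7.8) we can rewrite it as

\[ \dot{\mathbf{x}}(t) = \mathbf{A}\mathbf{x}(t) = \mathbf{P}\boldsymbol{\Lambda}\mathbf{P}^{-1}\mathbf{x}(t). \tag{7.9} \]

Pre-multiplying both sides of the last equation by \(\mathbf{P}^{-1}\), we now have

\[ \mathbf{P}^{-1}\dot{\mathbf{x}}(t) = \boldsymbol{\Lambda}\mathbf{P}^{-1}\mathbf{x}(t). \]

Defining \(\mathbf{y}(t) = \mathbf{P}^{-1}\mathbf{x}(t)\), so that \(\dot{\mathbf{y}}(t) = \mathbf{P}^{-1}\dot{\mathbf{x}}(t)\) because \(\mathbf{P}\) is constant, the last equation can be rewritten as

\[ \dot{\mathbf{y}}(t) = \boldsymbol{\Lambda}\mathbf{y}(t). \tag{7.10} \]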

What is the point of this change of variables, \(\mathbf{y}=\mathbf{P}^{-1} \mathbf{x}\)?

Observe that instead of solving a coupled ODE system in Equation (7.9), we can equivalently solve the decoupled system in Equation (7.10) for \(\mathbf{y}(t)\). The latter is just a stack of scalar ODEs that do not affect each other: We know how to solve these already!

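Geometrically, we have performed a change of coordinates on the original vector of state variables, via the linear map \(\mathbf{P}^{-1}\). This is a change of basis rather than, in general, a pure rotation, since the eigenvectors need not be orthogonal. In the new coordinates, each component \(y_{i}\) of \(\mathbf{y}\) evolves along the \(i\)-th axis, independently of the other components \(y_{j}\) along all the other \(j\)-th axes. Back in the original coordinates, the \(i\)-th axis corresponds to the direction of the eigenvector \(\mathbf{v}_{i}\).

The solution for Equation (7.10) is

\[ y_{i}(t) = c_{i}e^{\lambda_{i}t}, \qquad i = 1, \ldots, k, \]

where each \(c_{i}\) is an arbitrary constant.

Since \(\mathbf{y}=\mathbf{P}^{-1} \mathbf{x}\), we have \(\mathbf{x} = \mathbf{P}\mathbf{y}\), so we can recover the general solution of the system in Equation (7.9):

\[ \mathbf{x}(t) = \mathbf{P}\mathbf{y}(t) = c_{1}e^{\lambda_{1}t}\mathbf{v}_{1} + \cdots + c_{k}e^{\lambda_{k}t}\mathbf{v}_{k}. \tag{7.11} \]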

What Equation (7.11) tells us is that the general solution is a linear combination of “straight-line solutions”.

There is quite a beautiful geometry behind how one goes about constructing this formula.

Let’s stare and think about this for a bit:

Each scalar term \(c_{i}e^{\lambda_{i}t}\) scales (i.e., lengthens or shortens) the associated eigenvector \(\mathbf{v}_{i}\) depending on \(t\). Scaling does not affect its property as an eigenvector.

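The set of points \(\{e^{\lambda_{i}t}\mathbf{v}_{i} \in \mathbb{R}^{k}: t \in \mathcal{T}\}\) lies on the “straight line” through the origin spanned by \(\mathbf{v}_{i}\). Since \(e^{\lambda_{i}t} > 0\), it stays on one side of the origin, on the half-line in the direction of \(\mathbf{v}_{i}\).

The sign of \(\lambda_{i}\) determines the direction of motion along that line: the straight-line solution moves away from the origin if \(\lambda_{i} > 0\) and toward it if \(\lambda_{i} < 0\).

The eigenvectors themselves, the columns of \(\mathbf{P}\), form a new (not necessarily orthogonal) basis for the state space, and the straight-line solutions run along these new coordinate axes.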

Thus, for each instance \(t\), we can write down the outcome of (or a point on) a general solution curve as a linear combination of straight-line solutions with weight \(c_{i}\). If we do this for every \(t \in \mathcal{T}\), we would be tracing out the solution curve using Equation (7.11).
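The construction above translates almost line by line into code. This is a minimal sketch assuming NumPy, \(t_{0} = 0\), and distinct real eigenvalues; the function name `general_solution` and the example matrix are hypothetical. The weights come from the initial condition, since at \(t = 0\) Equation (7.11) reads \(\mathbf{x}_{0} = \mathbf{P}\mathbf{c}\).

```python
import numpy as np

def general_solution(A, x0, ts):
    """Evaluate Equation (7.11) at times ts, given x(0) = x0 (t0 = 0 assumed).

    Returns a (k, len(ts)) array whose j-th column is x(ts[j]).
    Only valid when A has distinct real eigenvalues.
    """
    lam, P = np.linalg.eig(A)
    if np.iscomplexobj(lam):
        raise ValueError("complex eigenvalues: see Section 7.1.3")
    if np.unique(np.round(lam, 10)).size < lam.size:
        raise ValueError("repeated eigenvalues: see Section 7.1.4")
    c = np.linalg.solve(P, x0)                            # weights c_i from x(0) = P c
    growth = np.exp(np.outer(lam, np.atleast_1d(ts)))     # e^{lambda_i t}, shape (k, n)
    # Column j is sum_i c_i e^{lambda_i t_j} v_i: a weighted sum of straight-line solutions.
    return P @ (c[:, None] * growth)

A = np.array([[1.0, 2.0], [2.0, 1.0]])   # hypothetical; eigenvalues 3 and -1
curve = general_solution(A, [1.0, 0.0], np.linspace(0.0, 1.0, 11))
print(curve[:, 0])                        # should match the initial condition (1, 0)
```

Plotting `curve[0]` against `curve[1]` traces the solution curve in the state space, one straight-line combination per time step.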

Let’s return to our planar ODE in Equation (7.1) for a few demonstrations.

Example 7.4 (Two-variables and Superposition)

Suppose we have an ODE as in Equation (7.1) and

The general solution is

Exercise 7.1

Construct the solution curve for the ODE in Example 7.4 that begins from initial value \(\mathbf{x}(t_{0}) = \mathbf{x}_{0} = (0.1, 0.1)\).

Hint: You can do this by hand or use a computer!

Geek out: If you code this up, could you try animating how you constructed points along the solution curve!

Example 7.5 (Damped oscillator)

Suppose we have an ODE as in Equation (7.1) and

This is another instance of Example 8.3 (the damped oscillator) where \(k=10\), \(b=7\) and \(m=1\).

Exercise 7.2

Construct the solution curve for the ODE in Example 7.5 that begins from initial value \(\mathbf{x}(t_{0}) = \mathbf{x}_{0} = (1, 1)\).

Hint: You can do this by hand or use a computer!
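One way to check an answer to Exercise 7.2 on a computer is sketched below. It assumes (this is our assumption, not stated in the text) that the damped oscillator \(m\ddot{y} + b\dot{y} + ky = 0\) is written in the standard state-space form with \(x_{1} = y\) (position) and \(x_{2} = \dot{y}\) (velocity), so that \(\mathbf{A} = \begin{bmatrix} 0 & 1 \\ -k/m & -b/m \end{bmatrix}\). With \(k=10\), \(b=7\), \(m=1\), Equation (7.6) becomes \(\lambda^{2} + 7\lambda + 10 = 0\), with roots \(-2\) and \(-5\), eigenvectors \((1,-2)\) and \((1,-5)\), and, from \(\mathbf{x}_{0} = (1,1)\), weights \(c_{1} = 2\), \(c_{2} = -1\).

```python
import numpy as np

# ASSUMPTION: state x = (position, velocity) for m*y'' + b*y' + k*y = 0.
k, b, m = 10.0, 7.0, 1.0
A = np.array([[0.0, 1.0],
              [-k / m, -b / m]])

lam, P = np.linalg.eig(A)             # eigenvalues -2 and -5 (order not guaranteed)
x0 = np.array([1.0, 1.0])
c = np.linalg.solve(P, x0)            # weights from x(0) = P c

ts = np.linspace(0.0, 3.0, 301)
curve = P @ (c[:, None] * np.exp(np.outer(lam, ts)))   # Equation (7.11), one column per t

def x_by_hand(t):
    # Hand-derived: v = (1, -2) for lambda = -2, v = (1, -5) for lambda = -5; c1 = 2, c2 = -1.
    return 2 * np.exp(-2 * t) * np.array([1.0, -2.0]) - np.exp(-5 * t) * np.array([1.0, -5.0])

print(np.allclose(curve, np.column_stack([x_by_hand(t) for t in ts])))  # True
```

Both eigenvalues are negative, so each straight-line component decays toward the origin; the \(e^{-5t}\) component dies out first, and the curve approaches the origin along the \((1,-2)\) direction.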

We can now summarize what we have just discussed about the solutions of autonomous, linear, homogeneous, first-order ODE systems.

Theorem 7.2

If \(\mathbf{y}(t)\) is a solution of the system (7.9) such that

\[ \dot{\mathbf{y}}(t) = \lambda \mathbf{y}(t) \] for all \(t\) and some constant \(\lambda\), then every nonzero \(\mathbf{y}(t)\) is an eigenvector of \(\mathbf{A}\) with eigenvalue \(\lambda\).

Suppose that \(\lambda\) is a real eigenvalue of the matrix \(\mathbf{A}\), with associated eigenvector \(\mathbf{v}\). Then \(\mathbf{y}(t)= e^{\lambda t}\mathbf{v}\) is a solution of the system (7.9).

Suppose \(\mathbf{y}_{1}(t), \ldots, \mathbf{y}_{k}(t)\) are solutions of the linear system (7.9). If \(\mathbf{y}_{1}(t), \ldots, \mathbf{y}_{k}(t)\) are linearly independent, then

\[ \mathbf{x}(t) = c_{1}\mathbf{y}_{1}(t) + \ldots + c_{k}\mathbf{y}_{k}(t) \] is the general solution of the system.

Exercise 7.3

Prove Theorem 7.2.

This is quite a remarkable theorem. In general, an ODE’s solution involves integration and that can be quite tricky, non-analytical business!

What Theorem 7.2 tells us is that, for this class of linear ODEs with distinct real eigenvalues, there is a very powerful geometric insight. This geometric approach says that all we need is linear algebra: Compute the eigenvectors and eigenvalues. Given these, we can write down the general solution, as in Equation (7.11) using pencil and paper!

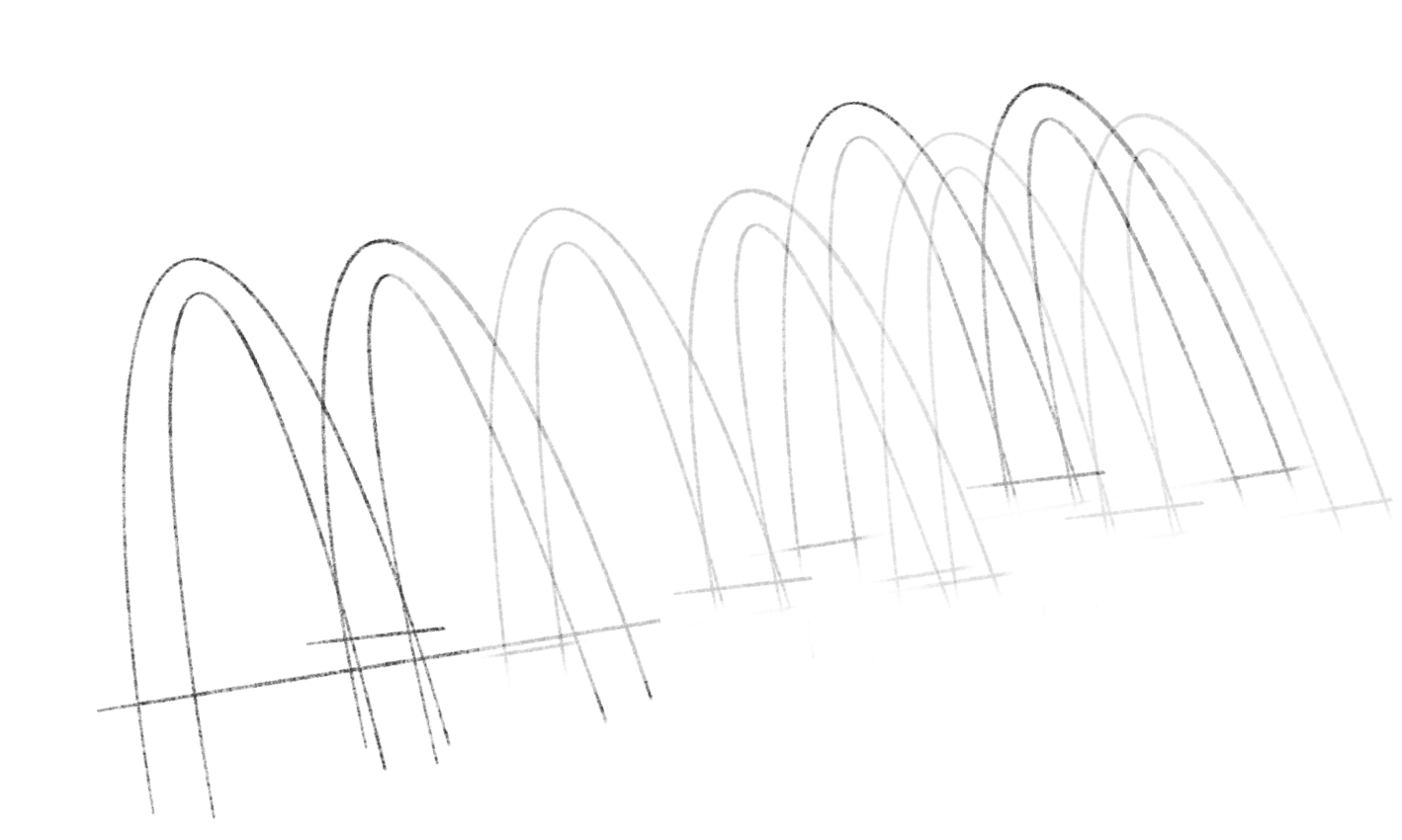

7.1.3. Complex eigenvalues

Unfortunately, when the eigenvalues of \(\mathbf{A}\) are complex, we lose the simple description of solutions of Equation (7.9) as linear combinations of straight-line solutions.

Let’s focus on the planar or two-variable example. The same insight can be extended to higher dimensions of variables.

Because \(\mathbf{A}\) is real-valued, its complex eigenvalues appear as complex conjugates of each other,

\[ \lambda_{1} = \alpha + i\beta, \qquad \lambda_{2} = \alpha - i\beta, \]

where \(i=\sqrt{-1}\) is the imaginary unit and \(\alpha\) and \(\beta \neq 0\) are real numbers. The number \(\alpha\) is called the real part and \(\beta\) the imaginary part of \(\lambda_{1}\); likewise, \(\lambda_{2}\) has real part \(\alpha\) and imaginary part \(-\beta\).

The associated eigenvectors can likewise be chosen as complex conjugates of each other:

\[ \mathbf{v}_{1} = \mathbf{u} + i\mathbf{w}, \qquad \mathbf{v}_{2} = \mathbf{u} - i\mathbf{w}, \]

where the vectors \(\mathbf{u}\) and \(\mathbf{w}\) are real valued.

The general solution is still of the same form as in Theorem 7.2 or Equation (7.11), except that it will not be a linear combination of “straight line solutions” anymore.

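In this two-variable example, we write the general solution as

\[ \mathbf{x}(t) = c_{1}e^{(\alpha + i\beta)t}(\mathbf{u} + i\mathbf{w}) + c_{2}e^{(\alpha - i\beta)t}(\mathbf{u} - i\mathbf{w}). \tag{7.12} \]

Euler’s formula, \(e^{i\beta t} = \cos(\beta t) + i\sin(\beta t)\), allows us to write

\[ e^{(\alpha + i\beta)t}(\mathbf{u} + i\mathbf{w}) = e^{\alpha t}\left[\cos(\beta t)\mathbf{u} - \sin(\beta t)\mathbf{w}\right] + i\,e^{\alpha t}\left[\sin(\beta t)\mathbf{u} + \cos(\beta t)\mathbf{w}\right]. \]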

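Since \(e^{(\alpha + i\beta)t}(\mathbf{u} + i \mathbf{w})\) and \(e^{(\alpha - i\beta)t}(\mathbf{u} - i \mathbf{w})\) are complex conjugates of each other, we can choose \(c_{1}\) and \(c_{2}\) to be complex conjugates of each other, so that the implied solution in Equation (7.12) will be real-valued:

\[ \mathbf{x}(t) = C_{1}e^{\alpha t}\left[\cos(\beta t)\mathbf{u} - \sin(\beta t)\mathbf{w}\right] + C_{2}e^{\alpha t}\left[\sin(\beta t)\mathbf{u} + \cos(\beta t)\mathbf{w}\right], \]

where \(C_{1} = c_{1} + c_{2} = 2\,\text{Re}(c_{1})\) and \(C_{2} = i(c_{1} - c_{2}) = -2\,\text{Im}(c_{1})\) are arbitrary real constants.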
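The real-valued form \(\mathbf{x}(t) = C_{1}e^{\alpha t}[\cos(\beta t)\mathbf{u} - \sin(\beta t)\mathbf{w}] + C_{2}e^{\alpha t}[\sin(\beta t)\mathbf{u} + \cos(\beta t)\mathbf{w}]\) can be checked numerically. A minimal sketch, assuming NumPy and a hypothetical matrix with eigenvalues \(-1 \pm 2i\): it reads \(\alpha\), \(\beta\), \(\mathbf{u}\), \(\mathbf{w}\) off one eigenpair, pins down \(C_{1}, C_{2}\) from \(\mathbf{x}(0) = C_{1}\mathbf{u} + C_{2}\mathbf{w}\), and compares a finite-difference derivative with \(\mathbf{A}\mathbf{x}(t)\).

```python
import numpy as np

# Hypothetical matrix (not from the text): eigenvalues -1 + 2i and -1 - 2i.
A = np.array([[0.0, 1.0],
              [-5.0, -2.0]])

eigvals, V = np.linalg.eig(A)
j = np.argmax(eigvals.imag)              # pick lambda_1 = alpha + i beta with beta > 0
alpha, beta = eigvals[j].real, eigvals[j].imag
u, w = V[:, j].real, V[:, j].imag        # v_1 = u + i w

x0 = np.array([1.0, 0.0])
# At t = 0 the real solution equals C1 u + C2 w, so solve [u w] (C1, C2) = x0.
C1, C2 = np.linalg.solve(np.column_stack([u, w]), x0)

def x(t):
    """Real-valued general solution with C1, C2 fixed by x(0) = x0."""
    cos, sin = np.cos(beta * t), np.sin(beta * t)
    return np.exp(alpha * t) * (C1 * (cos * u - sin * w) + C2 * (sin * u + cos * w))

# Sanity check: a central finite difference of x(t) should match A x(t).
h, t = 1e-6, 0.7
print(np.allclose((x(t + h) - x(t - h)) / (2 * h), A @ x(t), atol=1e-6))
```

Any complex rescaling of the eigenvector returned by `np.linalg.eig` changes `u`, `w`, `C1`, and `C2`, but not the resulting trajectory: with \(\alpha < 0\) it spirals into the origin.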

7.1.4. Repeated real eigenvalues

In Section 7.1.2 we saw that \(\mathbf{A}\) is diagonalizable if it has \(k\) distinct real eigenvalues.

What if this is not the case?

Let’s again work with the two-dimensional case, \(k=2\).

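If \(\mathbf{A} \in \mathbb{R}^{2 \times 2}\) is a real-valued matrix with a repeated (equal) eigenvalue \(\lambda\) and \(\mathbf{A} \neq \lambda\mathbf{I}\), then it has only one linearly independent eigenvector \(\mathbf{v}\). (If \(\mathbf{A} = \lambda\mathbf{I}\), every nonzero vector is an eigenvector and \(\mathbf{A}\) is already diagonal.)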

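In this case, \(\mathbf{A}\) is not diagonalizable, but we can come close to diagonalizing it. There is a generalized eigenvector, \(\mathbf{w}\), such that

\[ (\mathbf{A} - \lambda\mathbf{I})\mathbf{w} = \mathbf{v}, \]

and, since \((\mathbf{A} - \lambda\mathbf{I})\mathbf{v} = \mathbf{0}\),

\[ (\mathbf{A} - \lambda\mathbf{I})^{2}\mathbf{w} = \mathbf{0}. \]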

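Now, we can do a change-of-variables linear transformation by defining the matrix \(\mathbf{P} = [\mathbf{v} \ \mathbf{w}]\), with which \(\mathbf{A}\) is decomposed as

\[ \mathbf{A} = \mathbf{P}\begin{bmatrix} \lambda & 1 \\ 0 & \lambda \end{bmatrix}\mathbf{P}^{-1}. \]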

This will render the transformed system a recursive one.

Exercise 7.4

Derive the recursive ODE system.

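The general solution can be shown to be

\[ \mathbf{x}(t) = c_{1}e^{\lambda t}\mathbf{v} + c_{2}e^{\lambda t}\left(t\mathbf{v} + \mathbf{w}\right). \]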

Exercise 7.5

Derive the last general-solution formula.
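Before deriving it, one can at least check numerically that \(\mathbf{x}(t) = c_{1}e^{\lambda t}\mathbf{v} + c_{2}e^{\lambda t}(t\mathbf{v} + \mathbf{w})\) solves the system. A minimal sketch, assuming NumPy and a hypothetical matrix with repeated eigenvalue \(\lambda = 2\) and \(\mathbf{A} \neq 2\mathbf{I}\); the vectors \(\mathbf{v}\) and \(\mathbf{w}\) were found by hand from \((\mathbf{A} - \lambda\mathbf{I})\mathbf{v} = \mathbf{0}\) and \((\mathbf{A} - \lambda\mathbf{I})\mathbf{w} = \mathbf{v}\).

```python
import numpy as np

# Hypothetical matrix (not from the text): tr(A) = 4, det(A) = 4, so lambda = 2 twice.
A = np.array([[3.0, 1.0],
              [-1.0, 1.0]])
lam = np.trace(A) / 2                 # double root of lambda^2 - tr(A) lambda + det(A)
N = A - lam * np.eye(2)

v = np.array([1.0, -1.0])             # eigenvector, found by hand: N v = 0
w = np.array([1.0, 0.0])              # generalized eigenvector, found by hand: N w = v
print(np.allclose(N @ v, 0.0), np.allclose(N @ w, v))

# Near-diagonalization with P = [v w]: A = P J P^{-1}.
P = np.column_stack([v, w])
J = np.array([[lam, 1.0], [0.0, lam]])
print(np.allclose(A, P @ J @ np.linalg.inv(P)))

x0 = np.array([2.0, 1.0])
c1, c2 = np.linalg.solve(P, x0)       # x(0) = c1 v + c2 w

def x(t):
    return np.exp(lam * t) * (c1 * v + c2 * (t * v + w))

# A central finite difference of x(t) should match A x(t).
h, t = 1e-6, 0.4
print(np.allclose((x(t + h) - x(t - h)) / (2 * h), A @ x(t), atol=1e-6))
```

The factor \(t\) multiplying \(\mathbf{v}\) is what distinguishes this case from Section 7.1.2: it comes from the \(1\) above the diagonal in \(\mathbf{J}\).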

7.2. More remarks

There is more to study in terms of ODEs.

For example, there are special classes of nonlinear ODEs that also admit analytical solutions. We won’t delve into them for now. The interested student should read the rest of Chapter 2 of Léonard and Ngo [15] and Chapters 23 to 25 of Simon and Blume [28].

To pique interest, recall the example of a nonlinear ODE from Example 3.3. Try this one on for size!

Exercise 7.6

In Example 3.3, we said that the class of constant relative risk aversion (CRRA) preference functions satisfies

We can define a change of variables by \(x(c) = U'(c)\) and re-write this as a first-order, separable nonlinear ODE:

Show that the general solution has the familiar CRRA power-function formula,

where \(A\) and \(B\) are arbitrary constants of integration.

Also, one might be interested in analyzing nonlinear ODEs from a global dynamic perspective. The rest of Chapter 2 of Léonard and Ngo [15] deals with this. See also Weber [33] for a more rigorous treatment with economic examples.